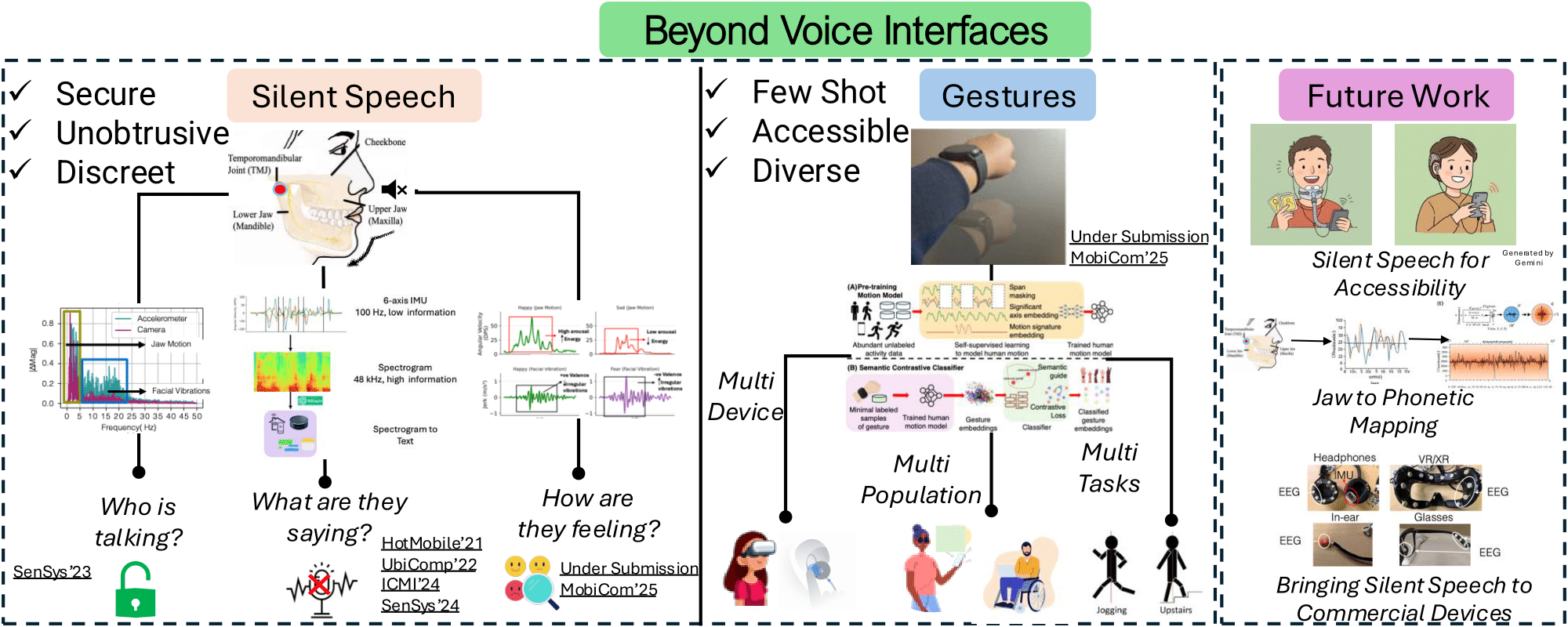

Tanmay’s research vision centers on creating intuitive, privacy-preserving, and accessible interaction methods for next-generation computing devices. As computing becomes embedded in daily life through earables, smart glasses, and AR/VR headsets, speech has emerged as the de facto interaction method because of its speed and ease of use. However, voice interfaces struggle in noisy environments, raise privacy concerns, and remain inaccessible to people with speech impairments. These challenges are magnified in wearable technologies, where traditional inputs are constrained. Tanmay’s work addresses these issues through "beyond-voice" interaction methods: silent speech interfaces and gesture-based interactions. He focuses on unobtrusive sensing locations, using ear-worn sensors to capture subtle jaw movements and build robust interaction systems for diverse applications and users. His gesture recognition framework extends this silent speech work with a generalizable human motion model that works across different devices with minimal training data. His research rests on three principles: (1) ensuring private interactions through speech-like interfaces, (2) developing systems that are easy to integrate into commercial devices, and (3) enabling universal interaction methods accessible to diverse users. Through this approach, Tanmay aims to make digital platforms more inclusive and adaptable to individual needs.