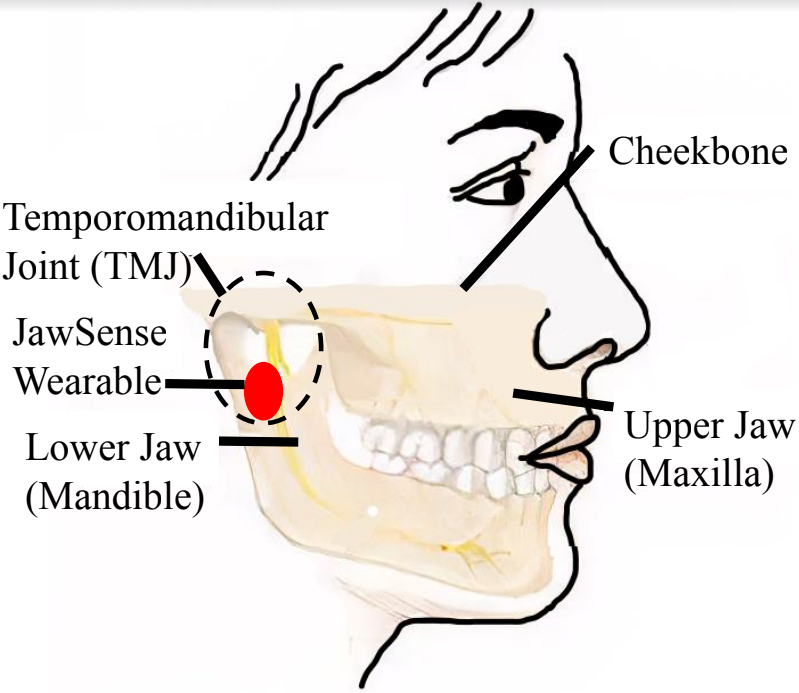

JawSense is a new wearable system that enables a novel form of human-computer interaction based on tracking unvoiced jaw movement: its user can interact with computing machines just by moving their jaw. We study the neurological and anatomical structure of the human cheek and jaw to design JawSense so that jaw movement can be captured reliably despite strong noise from human-generated artifacts. In particular, JawSense senses the muscle deformation and vibration caused by unvoiced speech to decode the phonemes the user silently speaks. We model the relationship between jaw movements and phonemes and use this model to build a classification algorithm that recognizes unvoiced sounds.
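To make the classification step more concrete, here is a minimal sketch under stated assumptions. The description above does not specify JawSense's actual features or classifier, so everything below is hypothetical. Each window of a single sensor channel is summarized by three hand-picked features (RMS energy, zero-crossing rate, peak-to-peak amplitude). A nearest-centroid rule, used here only as a simple stand-in classifier, then assigns the window to the closest phoneme class. The names `window_features` and `NearestCentroidClassifier` are illustrative and are not part of any JawSense API.

```python
import math
from typing import Dict, List, Sequence


def window_features(signal: Sequence[float]) -> List[float]:
    """Hypothetical per-window features: RMS energy, zero-crossing rate, peak-to-peak."""
    n = len(signal)
    if n < 2:
        raise ValueError("window needs at least two samples")
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove DC offset (e.g. sensor bias)
    rms = math.sqrt(sum(x * x for x in centered) / n)
    # Count sign changes between consecutive centered samples.
    crossings = sum(1 for a, b in zip(centered, centered[1:]) if (a < 0) != (b < 0))
    zcr = crossings / (n - 1)
    p2p = max(signal) - min(signal)
    return [rms, zcr, p2p]


class NearestCentroidClassifier:
    """Stand-in classifier: one mean feature vector (centroid) per phoneme label."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, windows: Sequence[Sequence[float]], labels: Sequence[str]) -> "NearestCentroidClassifier":
        sums: Dict[str, List[float]] = {}
        counts: Dict[str, int] = {}
        for window, label in zip(windows, labels):
            feats = window_features(window)
            if label not in sums:
                sums[label] = [0.0] * len(feats)
                counts[label] = 0
            sums[label] = [s + f for s, f in zip(sums[label], feats)]
            counts[label] += 1
        # Average the accumulated feature sums to get each class centroid.
        self.centroids = {lbl: [s / counts[lbl] for s in sums[lbl]] for lbl in sums}
        return self

    def predict(self, window: Sequence[float]) -> str:
        feats = window_features(window)
        # Pick the label whose centroid is closest in Euclidean distance.
        return min(self.centroids, key=lambda lbl: math.dist(feats, self.centroids[lbl]))
```

Nearest-centroid is chosen only because it fits in a few lines. Because the features live on different scales, they would normally be normalized before distances are compared, and a practical system would likely combine several sensor channels and a stronger model.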

We are currently extending this work to recognize unvoiced commands by jointly analyzing jaw motion and skin vibration and deformation.